Imagine a world where machines can learn and evolve, much like the human brain. A world where artificial intelligence can decipher complex patterns, process vast amounts of data, and make predictions with astonishing accuracy. This is not a distant dream, but a reality that is unfolding before our eyes thanks to neural network technology.

So, what exactly are neural networks, and how do they function? In this comprehensive guide, we will delve into the fascinating world of neural networks, explore their structure, and investigate their various applications, from image recognition to natural language processing.

Short Summary

- Neural networks are a subset of artificial intelligence that emulates the functioning of neurons in the human brain, used for various applications such as image recognition and natural language processing.

- Deep neural networks involve two or more layers of processing with multiple hidden layers between input and output, allowing them to model complex data and learn from it.

- Neural network algorithms enable real-world tasks such as facial recognition, autonomous driving, and medical diagnosis, demonstrating their transformative potential.

Exploring Neural Networks

Neural networks, also known as artificial neural networks (ANNs), are a subset of artificial intelligence (AI) that simulates the functioning of the biological neural networks in the human brain. They are composed of interconnected nodes (artificial neurons) that process and learn from input data, much like the biological neural systems they are modeled after.

Neural networks have the remarkable ability to recognize patterns and make predictions based on training data, making them a powerful tool in various AI applications, including image recognition, natural language processing, and even autonomous driving.

The Science Behind Neural Networks

Deep learning, a branch of AI, involves the utilization of artificial neural networks composed of interconnected layers of artificial neurons. These networks are trained using large datasets and algorithms to identify patterns and make predictions. Each neuron within the network processes its input and passes the result to the neurons in the subsequent layer. This procedure is repeated layer by layer until the output is generated.
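To make the idea of one neuron handing its result to the next more concrete, here is a minimal sketch in plain Python. The `neuron` helper, the sigmoid activation, and every weight and bias value are assumptions chosen purely for illustration; they do not come from any particular library or trained model.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical values: the first neuron sees two raw inputs,
# the second neuron sees only the first neuron's output.
first = neuron([0.5, -1.0], weights=[0.8, 0.2], bias=0.1)
second = neuron([first], weights=[1.5], bias=-0.5)

print(first, second)
```

In a real network each layer holds many such neurons, but the pattern of "compute, then pass along" is the same.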

So, how do these artificial neurons collaborate to learn and make predictions? The answer lies in the algorithms that train even the most basic neural network to discern correlations in large amounts of data.

Neural network algorithms are a set of mathematical rules and procedures that train and operate neural networks, imitating the operations of an animal brain to discern correlations between large amounts of data.

One example of a network trained by such algorithms is the multi-layered perceptron (MLP), which consists of interconnected layers of input, hidden, and output nodes.

During training, the weightings on the connections feeding the hidden layers are adjusted until the neural network's margin of error is minimized, allowing the network to identify relevant features in the input data indicative of the outputs.

Neural Network Algorithms

The algorithms employed in neural networks play a crucial role in their learning capabilities. One popular example, the multi-layered perceptron (MLP), is a type of neural network composed of interconnected layers of input, hidden, and output nodes. The input layer gathers information from its environment.

The output layer then uses this information to classify or produce signals that correspond to the input patterns. During training, the weightings on the connections into the hidden layers are refined until the margin of error of the neural network is minimal. This process can be likened to feature extraction, providing a similar benefit to statistical techniques such as principal component analysis.

Neural network algorithms encompass various learning types, such as supervised, unsupervised, and reinforcement learning. These algorithms enable neural networks to recognize patterns in data, discern correlations between disparate elements of data, and apply this knowledge to make predictions or decisions.

By continuously adjusting the weights and biases in the network, the algorithms allow the neural network to learn from its training examples and improve its performance over time.
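The weight-and-bias adjustment described above can be sketched with a single linear neuron trained by gradient descent. This is a deliberately tiny illustration, not how any specific framework implements training; the function name, the learning rate, the epoch count, and the toy data (points on the line y = 2x + 1) are all assumptions.

```python
def train_linear_neuron(samples, learning_rate=0.1, epochs=200):
    """Fit prediction = w * x + b by nudging w and b after every sample."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x + b
            error = prediction - target
            # Gradient of 0.5 * error**2 with respect to w and b.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b

# Hypothetical training examples drawn from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_neuron(samples)
print(w, b)  # both values end up very close to 2 and 1
```

Each pass shrinks the error a little, which is the sense in which the network "improves its performance over time".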

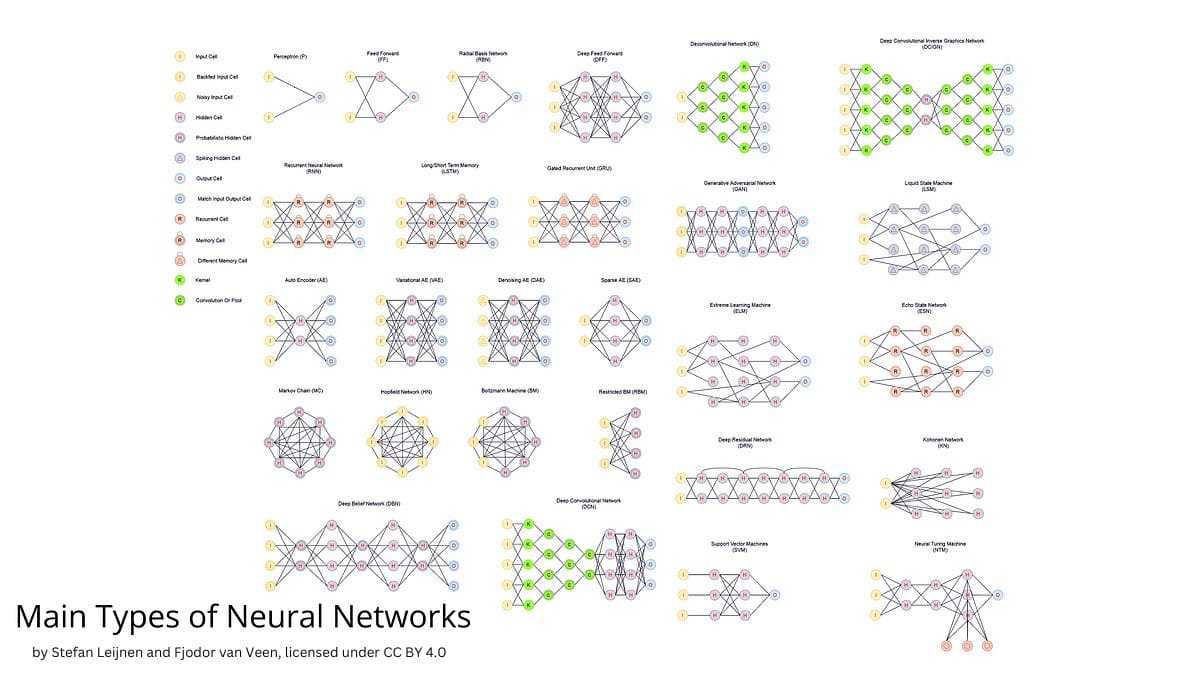

Types of Neural Networks and Their Applications

There are various types of neural networks, including feedforward neural networks, recurrent neural networks, and convolutional neural networks, each with its unique set of applications. The distinctions between these types of neural networks lie in the manner in which they process data and the types of tasks for which they are most suitable.

For instance, feedforward neural networks are most appropriate for classification tasks, whereas recurrent neural networks are most suitable for sequence-based tasks. Convolutional neural networks, on the other hand, are best suited for image recognition tasks.

Let's take a closer look at each of these types of neural networks and their respective applications.

Feedforward Neural Networks

A feedforward neural network is a type of artificial neural network in which the connections between nodes do not form a loop, so information is passed in a forward direction only, from input to output. Such networks are sometimes referred to as multi-layer neural networks. This type of network is mainly utilized for supervised learning in situations where the data to be learned is neither sequential nor time-dependent.

One example of a feedforward neural network is the multilayer perceptron (MLP), a class of ANNs that consists of at least three layers, including an input, a hidden, and an output layer. The MLP is a powerful machine learning algorithm capable of distinguishing nonlinearly separable data. Its training process is based on the backpropagation learning algorithm.
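The classic example of data that is not linearly separable is XOR: no single straight line splits its "1" cases from its "0" cases, yet a network with one hidden layer handles it. The sketch below uses hand-picked weights and a simple step activation to keep the arithmetic visible; it is an illustrative assumption, not weights learned by backpropagation.

```python
def step(z):
    """Fire (1) when the weighted input is positive, otherwise stay silent (0)."""
    return 1 if z > 0 else 0

def xor_mlp(x1, x2):
    # Hidden layer: two nodes with hand-chosen weights and thresholds.
    h_or = step(x1 + x2 - 0.5)    # active if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # active only if both inputs are 1
    # Output node: "OR but not AND", which is exactly XOR.
    return step(h_or - h_and - 0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_mlp(a, b))
# prints:
# 0 0 0
# 0 1 1
# 1 0 1
# 1 1 0
```

In practice, backpropagation would search for weights that play the same role as the hand-picked ones here.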

Feedforward neural networks are used in applications such as facial recognition technologies. Their ability to learn intricate patterns, scalability, and capacity to generalize make them a powerful tool in this domain. However, they do have some limitations, such as their inability to learn temporal patterns and their vulnerability to overfitting.

Recurrent Neural Networks

Recurrent neural networks (RNNs) are a more complex type of neural network in which the output from a node is fed back into the network, forming a loop. This allows the network, in theory, to "learn" and enhance its performance by retaining information from earlier steps and reusing it while processing later ones.

RNNs are particularly well-suited for tasks that involve sequences or time-dependent data. One notable application of RNNs is in text-to-speech technologies. By processing and learning from sequential data, RNNs can generate speech output that closely resembles human speech patterns. Their ability to process sequential data also makes them a valuable tool in natural language processing and other sequence-based tasks.
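The feedback loop that lets an RNN carry information along a sequence can be sketched with a single recurrent unit. The function names, the tanh activation, and the weight values below are assumptions for illustration only.

```python
import math

def rnn_step(x, h_prev, w_in, w_rec, bias):
    """One recurrent step: mix the current input with the previous state."""
    return math.tanh(w_in * x + w_rec * h_prev + bias)

def run_rnn(sequence, w_in=0.5, w_rec=0.9, bias=0.0):
    """Feed a sequence through the unit, carrying the state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_in, w_rec, bias)
        states.append(h)
    return states

# Only the first element is non-zero, yet later states still reflect it.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

The state stays positive on the second and third steps even though the inputs there are zero, which is the "memory" of earlier steps that a feedforward network lacks.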

Convolutional Neural Networks

Convolutional neural networks (ConvNets or CNNs) are a type of neural network built from multiple layers, including convolutional layers that slide small learned filters across the input to detect local features such as edges and textures. These networks are particularly advantageous for image recognition applications, as they can efficiently process and learn from large volumes of image data.

Deconvolutional neural networks, on the other hand, are used to detect elements that a convolutional neural network might have recognized as significant. The ability of convolutional neural networks to process image data and recognize patterns in it has led to their widespread adoption in computer vision applications.

From image classification and object detection to facial recognition and image captioning, CNNs have revolutionized the way we process and understand visual information.
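At the heart of a CNN is the convolution operation, in which a small filter slides over the image and produces a "feature map" showing where a pattern occurs. The sketch below is a bare-bones version with no padding or stride, computed as the sliding dot product that deep learning libraries commonly call convolution. The tiny image and the vertical-edge filter are made-up values; a real CNN learns its filter values during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 3x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
vertical_edge = [[-1, 1], [-1, 1]]  # responds to dark-to-bright transitions

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The non-zero column in the feature map marks exactly where the dark region meets the bright one.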

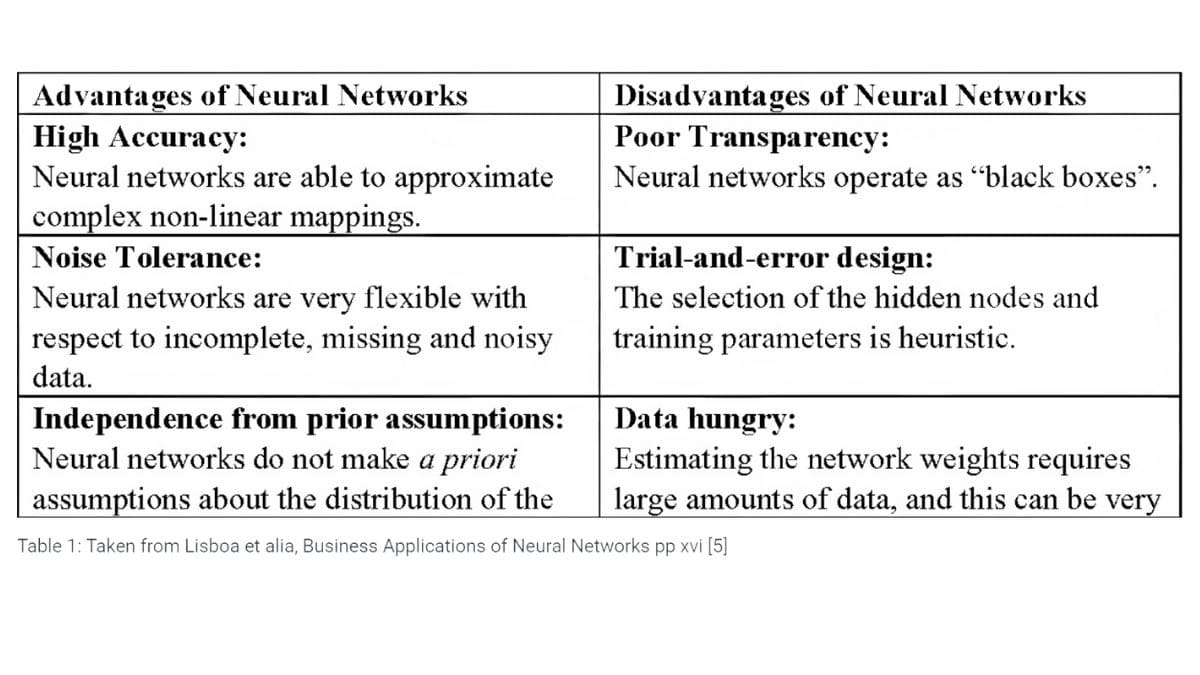

Advantages and Disadvantages of Neural Networks

Neural networks offer numerous advantages over traditional algorithms, such as their ability to learn from prior outputs, process intricate data, and make accurate predictions. However, they also come with some inherent drawbacks, such as their significant hardware requirements, lengthy development times, and the challenge of auditing their operations.

In this section, we will delve deeper into the pros and cons of neural networks, shedding light on their strengths and limitations.

Pros of Neural Networks

One of the key advantages of neural networks is their ability to learn from data, solve complex problems, and detect patterns that traditional algorithms may not be able to. Neural networks can learn from data through the use of algorithms tailored to recognize patterns in the data, discerning correlations between data points and employing this information to make predictions or decisions.

Furthermore, neural networks are capable of generalizing, which means they can detect patterns in historical data and make predictions or decisions based on data they have not previously encountered. This independent learning capability allows neural networks to generate output not restricted to the initial input, enabling them to adapt and improve over time.

Cons of Neural Networks

Despite their numerous advantages, neural networks are not without their drawbacks. One of the most notable challenges is their "black box" nature, which makes it difficult to explain and interpret their operations.

Neural networks consist of multiple layers of interconnected neurons, and the exact manner in which they interact is not always evident, making it challenging for researchers and practitioners to understand and audit their performance.

Another drawback is the high computational requirements of neural networks, which necessitate large amounts of data for training and significant processing power. They also require considerable time for training, as they must be trained on extensive datasets to obtain accurate results.

Moreover, neural networks are sensitive to the initial randomization of weight matrices, which can result in inaccurate outcomes if the weights are not appropriately initialized. This can present a challenge when training a neural network, as the weights must be precisely adjusted to obtain the desired results.
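One well-known way initialization can go wrong is symmetry: if every hidden node starts with identical weights, every node receives an identical update and they never learn different features. The sketch below illustrates this for a tiny network with a tanh hidden layer, a linear output, and a squared-error loss. The network shape, function name, and all values are assumptions chosen for the demonstration.

```python
import math
import random

def hidden_gradients(x, target, hidden_w, out_w):
    """Gradient of 0.5 * (y - target)**2 with respect to each hidden node's weights."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in hidden_w]
    y = sum(v * h for v, h in zip(out_w, hidden))
    err = y - target
    # Chain rule: error * output weight * tanh derivative * input value.
    return [[err * v * (1 - h * h) * xi for xi in x]
            for v, h in zip(out_w, hidden)]

x, target = [1.0, -2.0], 0.5

# Identical starting weights: both hidden nodes get the same gradient.
same = hidden_gradients(x, target, [[0.3, 0.3], [0.3, 0.3]], [0.3, 0.3])

# Small random starting weights (seeded for repeatability) break the tie.
rng = random.Random(0)
rand_w = [[rng.uniform(-0.5, 0.5) for _ in x] for _ in range(2)]
rand_v = [rng.uniform(-0.5, 0.5) for _ in range(2)]
varied = hidden_gradients(x, target, rand_w, rand_v)

print(same[0] == same[1])      # True
print(varied[0] == varied[1])  # False
```

This is one reason networks are started from small random weights rather than from a constant.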

Components and Structure of Neural Networks

The basic architecture of a neural network comprises interconnected artificial neurons organized into three layers: the input layer, the processing layer, and the output layer. These layers work in tandem to process and learn from input data, ultimately producing the final output or prediction.

In this section, we will explore each of these layers in greater detail, shedding light on their roles and functions within the whole neural network architecture.

Input Layer

The input layer of a neural network is the first layer of nodes, which receives input data from external sources.

This layer serves as the initial point of contact between the neural network and the outside world, obtaining numerical data in the form of activation values.

The input layer plays a crucial role in the overall functioning of the neural network, as it is responsible for receiving the input data and passing it to the subsequent layers.

Processing Layer

The processing layer of a neural network is the layer wherein the computation and transformation of input data takes place. This layer consists of one or more hidden layers, which are responsible for refining the input weightings and identifying relevant features in the input data.

The hidden layers use activation functions to determine whether to forward the signal or not, based on the neuron's output. Because its weights and biases are adjusted during training, the processing layer also plays a significant role in the learning capabilities of the neural network.
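A few commonly used activation functions can be written in a line or two each. The step function matches the "forward the signal or not" description most literally, while sigmoid and ReLU are smoother alternatives widely used in practice. The sample inputs below are arbitrary.

```python
import math

def step(z):
    """All-or-nothing: pass a signal only when the input is positive."""
    return 1.0 if z > 0 else 0.0

def sigmoid(z):
    """Smoothly squash any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive inputs through unchanged and block negative ones."""
    return max(0.0, z)

for z in (-2.0, 0.0, 3.0):
    print(z, step(z), sigmoid(z), relu(z))
```

Which function a layer uses is a design choice; the weighted sum feeding into it is computed the same way regardless.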

Output Layer

The output layer is the last layer of neurons in a neural network, responsible for producing the final prediction or classification of the input data. This layer can have one output node or multiple nodes, depending on the specific application of the neural network.
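When the output layer has several nodes, one per class, a common approach is to turn their raw scores into probabilities with a softmax function and pick the largest. The sketch below assumes three hypothetical output scores; the function and variable names are illustrative.

```python
import math

def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    top = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores from three output nodes.
probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))

print(probs, predicted_class)
```

A network with a single output node, by contrast, typically produces one number directly, such as a predicted value or the probability of a yes/no outcome.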

The output layer provides the ultimate outcome of all the data processing conducted by the artificial neural network, transforming the processed information into meaningful results.

The cost function is employed to calculate the disparity between predicted and actual values, allowing the neural network to learn from its mistakes and improve its performance over time.
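One widely used cost function is the mean squared error, which averages the squared gap between each prediction and its actual value. The sketch below is a minimal version, with made-up predictions and targets.

```python
def mean_squared_error(predicted, actual):
    """Average of the squared differences between predictions and targets."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

# Hypothetical network outputs versus the true values.
loss = mean_squared_error([0.9, 0.2, 0.4], [1.0, 0.0, 0.0])
print(loss)  # roughly 0.07
```

A lower value means the predictions sit closer to the actual values, so training aims to drive this number down.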

Deep Neural Networks: The Next Level of AI

Deep neural networks represent the next level of artificial intelligence, harnessing the power of interconnected nodes and layered structures to process data in increasingly intricate ways.

These advanced networks are capable of learning from prior outcomes and evolving over time, enabling them to tackle complex tasks such as image recognition and natural language processing with remarkable accuracy.

In this section, we will delve deeper into the world of deep neural networks, exploring their defining characteristics and the numerous applications they have revolutionized.

Defining Deep Neural Networks

A deep neural network involves two or more layers of processing, each layer responsible for a distinct task. These deep learning networks are a subset of machine learning and bear resemblance to the biological structure of the brain. Deep neural networks consist of multiple hidden layers between the input and output layers, allowing them to model complex data and learn from it.

Deep neural networks progress by comparing predicted outcomes to actual outcomes and adjusting future estimations. This continuous learning process enables deep neural networks to improve their performance over time, making them an invaluable tool in various AI applications.

Applications and Future Potential

Deep neural networks are utilized in a wide range of applications, including image recognition, natural language processing, and autonomous vehicles. For instance, convolutional neural networks have revolutionized computer vision tasks such as object detection, image segmentation, and image classification.

In the realm of natural language processing, deep neural networks have enabled advancements in text classification, sentiment analysis, machine translation, and question answering. Moreover, deep neural networks have also found applications in speech recognition, robotics, and medical diagnosis, showcasing their versatility and potential for future advancements.

As AI continues to evolve, the potential applications of deep neural networks are seemingly limitless. From enhancing medical diagnostics to enabling safer autonomous transportation, deep neural networks have the power to transform virtually every aspect of our lives, opening the door to a world of possibilities.

Real-World Examples of Neural Network Applications

Real-world examples of neural network applications are abundant, demonstrating the transformative power of these advanced technologies. For instance, convolutional neural networks are widely employed in facial recognition and image processing technologies, enabling accurate identification and analysis of visual data.

In the automotive industry, neural networks are used in autonomous vehicles to help them make decisions based on the data they receive from their environment. In the medical field, neural networks are used in medical diagnosis to assist physicians in making more precise diagnoses.

By processing vast amounts of medical data and detecting patterns, neural networks can help identify potential health issues and suggest appropriate treatments.

From image recognition to natural language processing, neural networks are revolutionizing the way we solve complex problems and make sense of the world around us.

Summary

In conclusion, neural networks represent a powerful and transformative technology with the potential to revolutionize various aspects of our lives. From image recognition and natural language processing to medical diagnosis and autonomous vehicles, neural networks have proven their effectiveness in solving complex problems and making accurate predictions.

As artificial intelligence continues to advance, the potential applications of neural networks are seemingly limitless, opening the door to a world of possibilities.

By understanding the inner workings, advantages, and limitations of neural networks, we can harness their potential to drive innovation and tackle some of the most pressing challenges of our time. The future of artificial intelligence is undoubtedly intertwined with the progress of neural networks, and as we continue to explore their capabilities, we move closer to realizing the full potential of this remarkable technology.